Last year, I started to get curious about the system that South Carolina uses to publish campaign finance disclosures.

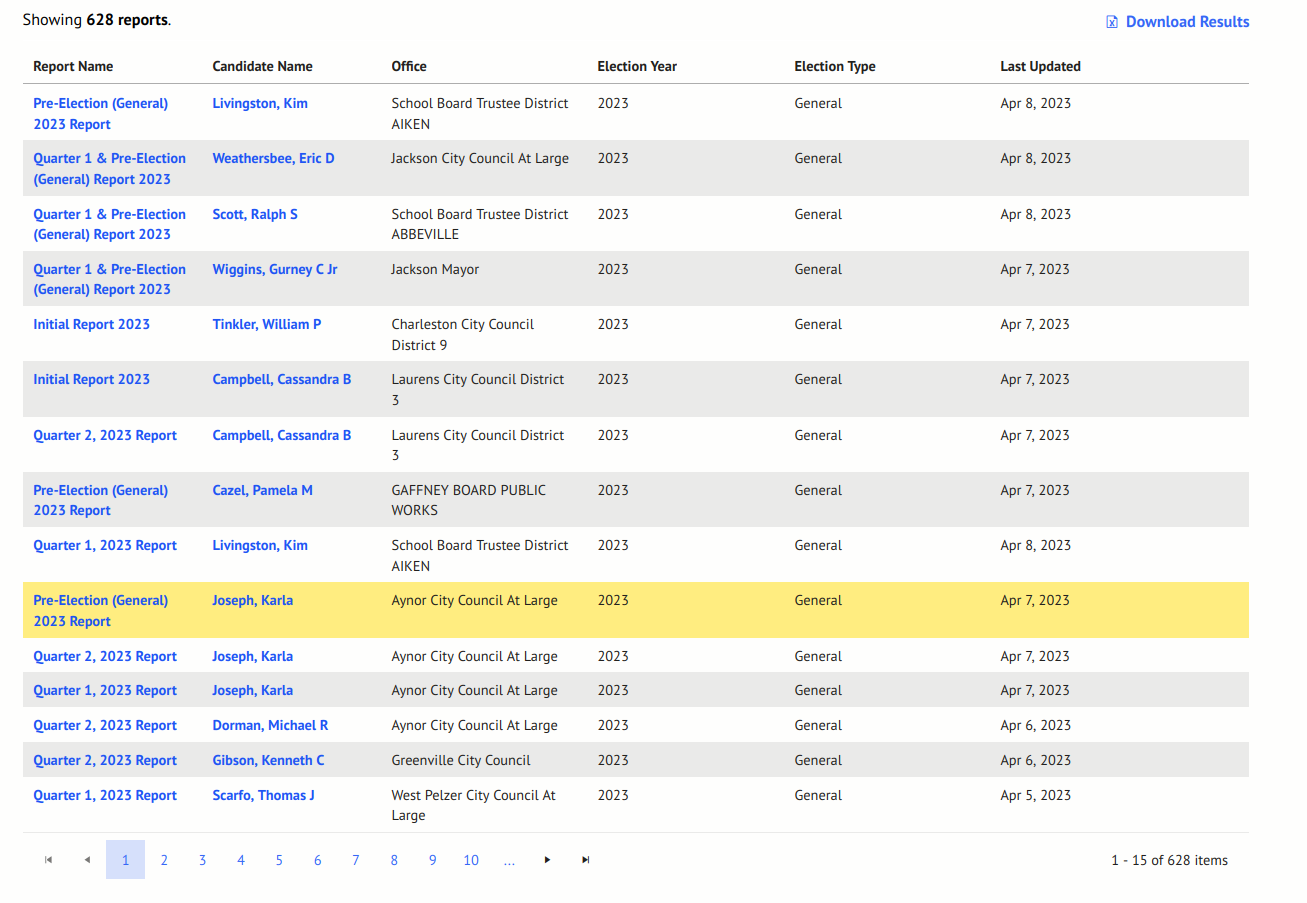

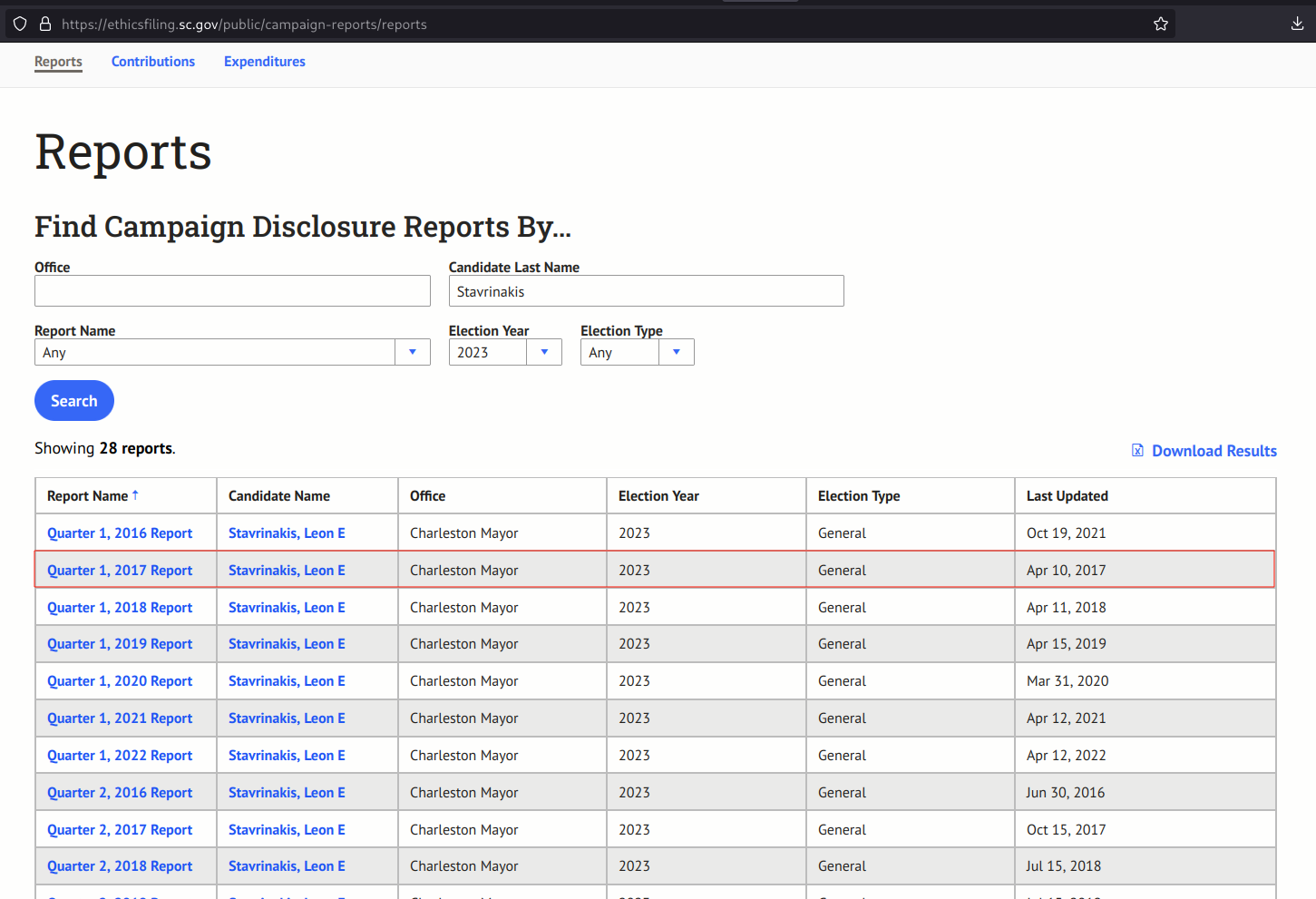

The first thing I noticed was that the system is really irritating to work with. I wanted a list of all candidates who had filed a report for the 2023 elections, with links to each report. The system will actually let you generate and display that list; as of this writing, it runs to 42 pages of reports.

You might notice the "Download Results" button at the top of that screenshot, so let's see what that gets us:

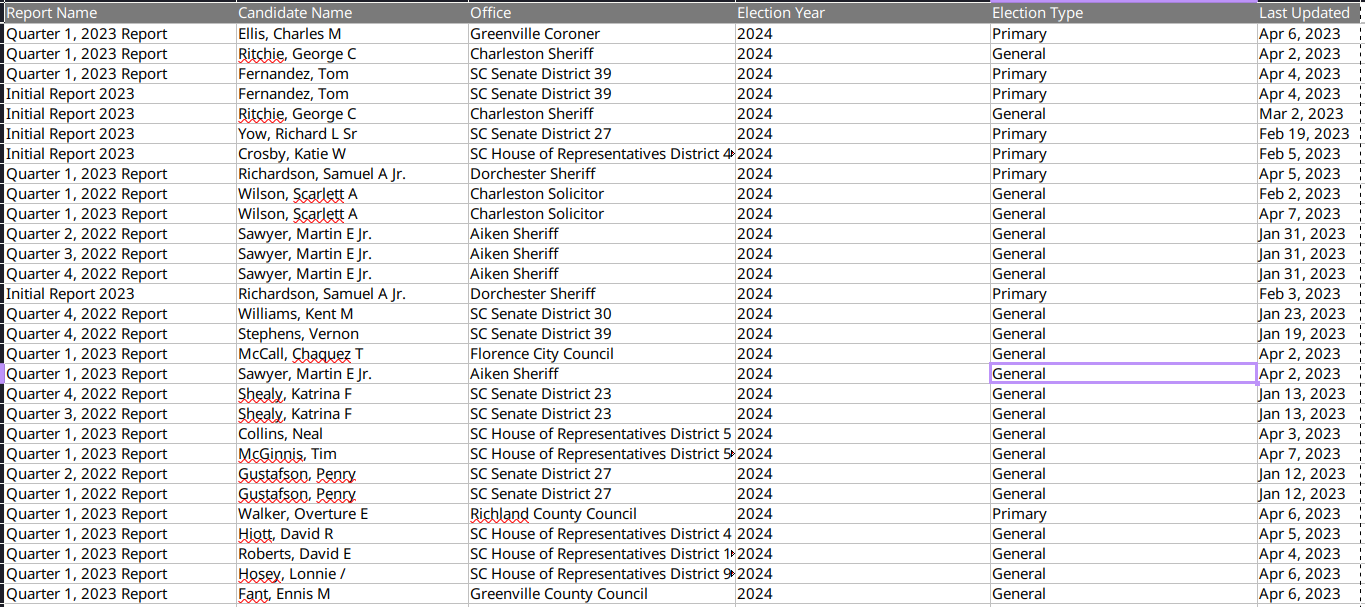

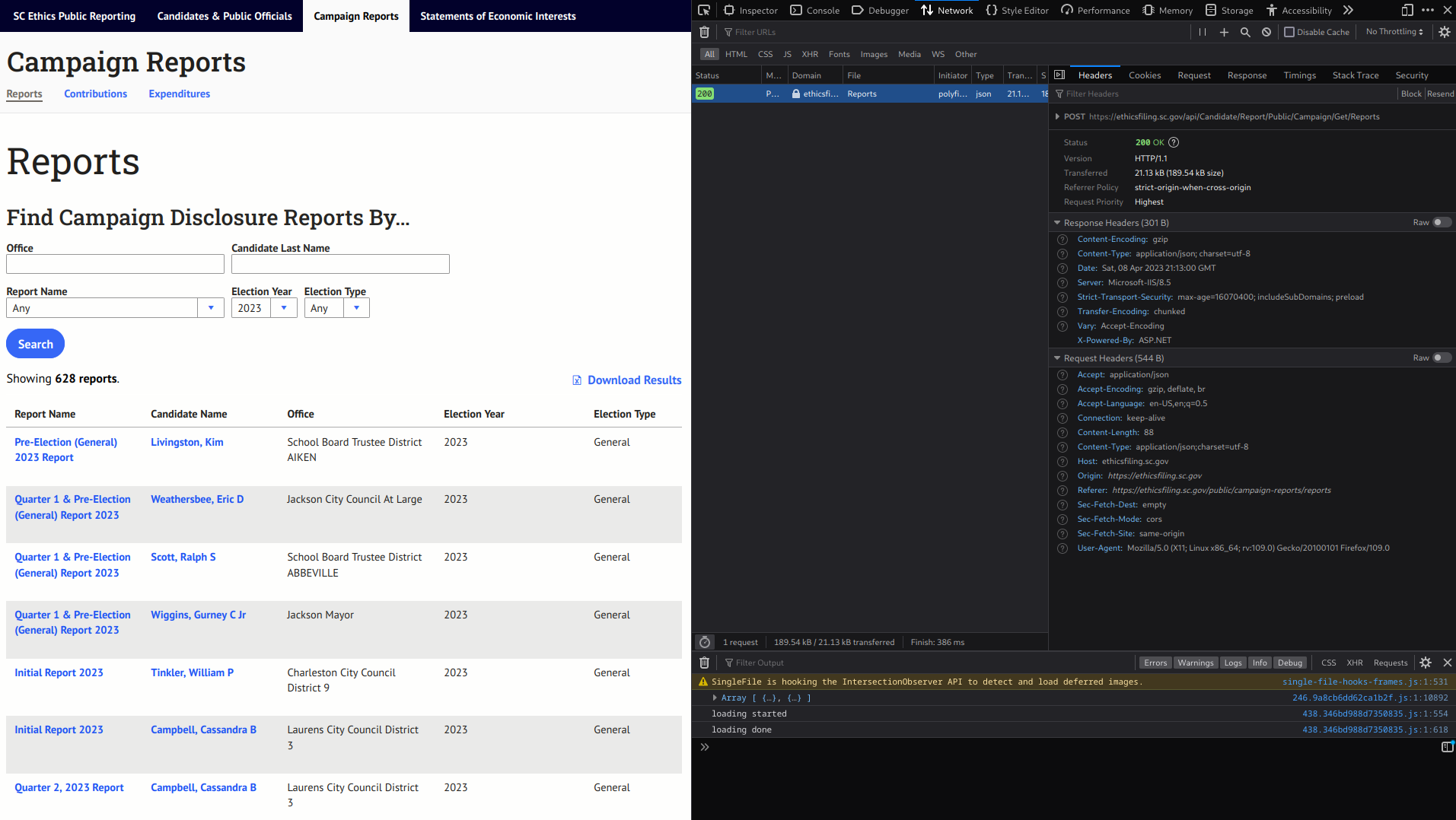

Well, we've got a spreadsheet but there are no links to the actual reports anywhere... That's not what I wanted. So I wondered if there was a way to actually get the links to all of these reports. Thankfully I know that websites have to pull their data from somewhere! Browsers have some pretty neat development tools that make understanding how a website gets its data a little easier.

A website can load data after the page itself has loaded and use that data to build what the user sees and interacts with. In the screenshot above, the panel on the right shows the network requests that the campaign finance website sent to a server somewhere.

Let's see what kind of data the website sent to the server: it says the type of request was a POST request to the URL https://ethicsfiling.sc.gov/api/Candidate/Report/Public/Campaign/Get/Reports containing the following data:

```json
{
  "candidate": "",
  "office": "",
  "reportType": "Any",
  "electionyear": 2023,
  "electionType": "Any"
}
```

So let's see what kind of data the server sends back to the browser (note: I'm only including the first few items of the data here because there are more than 600):

```json
[
  {
    "candidateFilerId": 12734,
    "seiFilerId": 2599,
    "credentialId": 30880,
    "campaignId": 49367,
    "reportId": 91107,
    "office": "Charleston Mayor",
    "reportName": "Quarter 4, 2016 Report",
    "candidateName": "Stavrinakis, Leon E ",
    "electionyear": "2023",
    "electionType": "General",
    "lastUpdated": "2017-01-15T17:51:47.343"
  },
  {
    "candidateFilerId": 12734,
    "seiFilerId": 2599,
    "credentialId": 30880,
    "campaignId": 49367,
    "reportId": 102867,
    "office": "Charleston Mayor",
    "reportName": "Quarter 1, 2017 Report",
    "candidateName": "Stavrinakis, Leon E ",
    "electionyear": "2023",
    "electionType": "General",
    "lastUpdated": "2017-04-10T20:54:12.927"
  },
  {
    "candidateFilerId": 12734,
    "seiFilerId": 2599,
    "credentialId": 30880,
    "campaignId": 49367,
    "reportId": 106007,
    "office": "Charleston Mayor",
    "reportName": "Quarter 2, 2017 Report",
    "candidateName": "Stavrinakis, Leon E ",
    "electionyear": "2023",
    "electionType": "General",
    "lastUpdated": "2017-10-15T19:32:31.97"
  }
]
```

So it looks like we can send POST requests to the URL using different years to get information about reports from candidates, but is that information useful (e.g. can we use it to make a list of reports with links)? Let's look at a report and see if we can find the information we got back from the server somewhere.
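Before doing that, here is a minimal sketch of what sending that same request from Python, rather than the browser, might look like. It sticks to the standard library. The helper names (`build_json_post`, `reports_payload`, `fetch_reports`), the timeout, and the explicit `Content-Type` header are my own assumptions for illustration; only the URL and the payload fields come from the request captured above.

```python
import json
import urllib.request

REPORTS_URL = "https://ethicsfiling.sc.gov/api/Candidate/Report/Public/Campaign/Get/Reports"


def reports_payload(year):
    """Mirror the body the browser sent, with only the election year varying."""
    return {
        "candidate": "",
        "office": "",
        "reportType": "Any",
        "electionyear": year,
        "electionType": "Any",
    }


def build_json_post(url, payload):
    """Build (but do not send) a POST request carrying a JSON body."""
    body = json.dumps(payload).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},  # assumed to be what the API expects
        method="POST",
    )


def fetch_reports(year, timeout=30):
    """Send the request and decode the JSON list of report records."""
    request = build_json_post(REPORTS_URL, reports_payload(year))
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))
```

If the server treats this the same way it treats the browser, `fetch_reports(2023)` should return the same list of records shown above, and changing the year should return a different election's reports. I haven't verified how the server handles every year, so treat that as something to test rather than a guarantee.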

I'll use the search feature to find reports for the 2023 election year for Leon Stavrinakis, since we already have information for him.

So we can see that there is a report that was listed in the response! Let's see where the link for that report goes: https://ethicsfiling.sc.gov/public/candidates-public-officials/person/campaign-disclosure-reports/report-detail?personId=12734&seiId=2599&officeId=49367&reportId=102867

Let's break down each part of that link after the question mark to understand what's happening here:

- personId = 12734 (that ID matches the candidateFilerId from the response)

- seiId = 2599 (that ID matches the seiFilerId from the response)

- officeId = 49367 (that ID matches the campaignId from the response)

- reportId = 102867 (that ID matches the reportId from the response)

```json
{
  "candidateFilerId": 12734,
  "seiFilerId": 2599,
  "credentialId": 30880,
  "campaignId": 49367,
  "reportId": 102867,
  "office": "Charleston Mayor",
  "reportName": "Quarter 1, 2017 Report",
  "candidateName": "Stavrinakis, Leon E ",
  "electionyear": "2023",
  "electionType": "General",
  "lastUpdated": "2017-04-10T20:54:12.927"
}
```

So now we can build a URL from the data the server sent back to us! That's really useful!
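As a rough sketch of that URL-building step in Python: the function below maps the four IDs in a record onto the four query parameters in the link. The function name `build_report_url` and the constant name are hypothetical; the base URL, the parameter names, and the sample values are taken from the link and the record shown above.

```python
from urllib.parse import urlencode

REPORT_DETAIL_URL = (
    "https://ethicsfiling.sc.gov/public/candidates-public-officials/person/"
    "campaign-disclosure-reports/report-detail"
)


def build_report_url(record):
    """Map one record from the reports response onto the report-detail link."""
    query = {
        "personId": record["candidateFilerId"],
        "seiId": record["seiFilerId"],
        "officeId": record["campaignId"],
        "reportId": record["reportId"],
    }
    return f"{REPORT_DETAIL_URL}?{urlencode(query)}"


# The record shown above, trimmed to the fields the link needs plus two labels.
record = {
    "candidateFilerId": 12734,
    "seiFilerId": 2599,
    "campaignId": 49367,
    "reportId": 102867,
    "reportName": "Quarter 1, 2017 Report",
    "candidateName": "Stavrinakis, Leon E ",
}

link = build_report_url(record)
print(record["candidateName"].strip(), "|", record["reportName"], "|", link)
```

Running `build_report_url` over every record in the response would give the list I originally wanted: each candidate's reports, each with a link.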

Now that we've done all of this, what have we learned? Well, with just a little more digging it becomes obvious that the entire campaign finance reporting system is set up like this: the browser sends a request and the server returns a response with data (not every website does this in a way that is so easy to understand). Now that we know the site is just a mediator between us and the underlying data, let's see if we can do something a little more exciting!

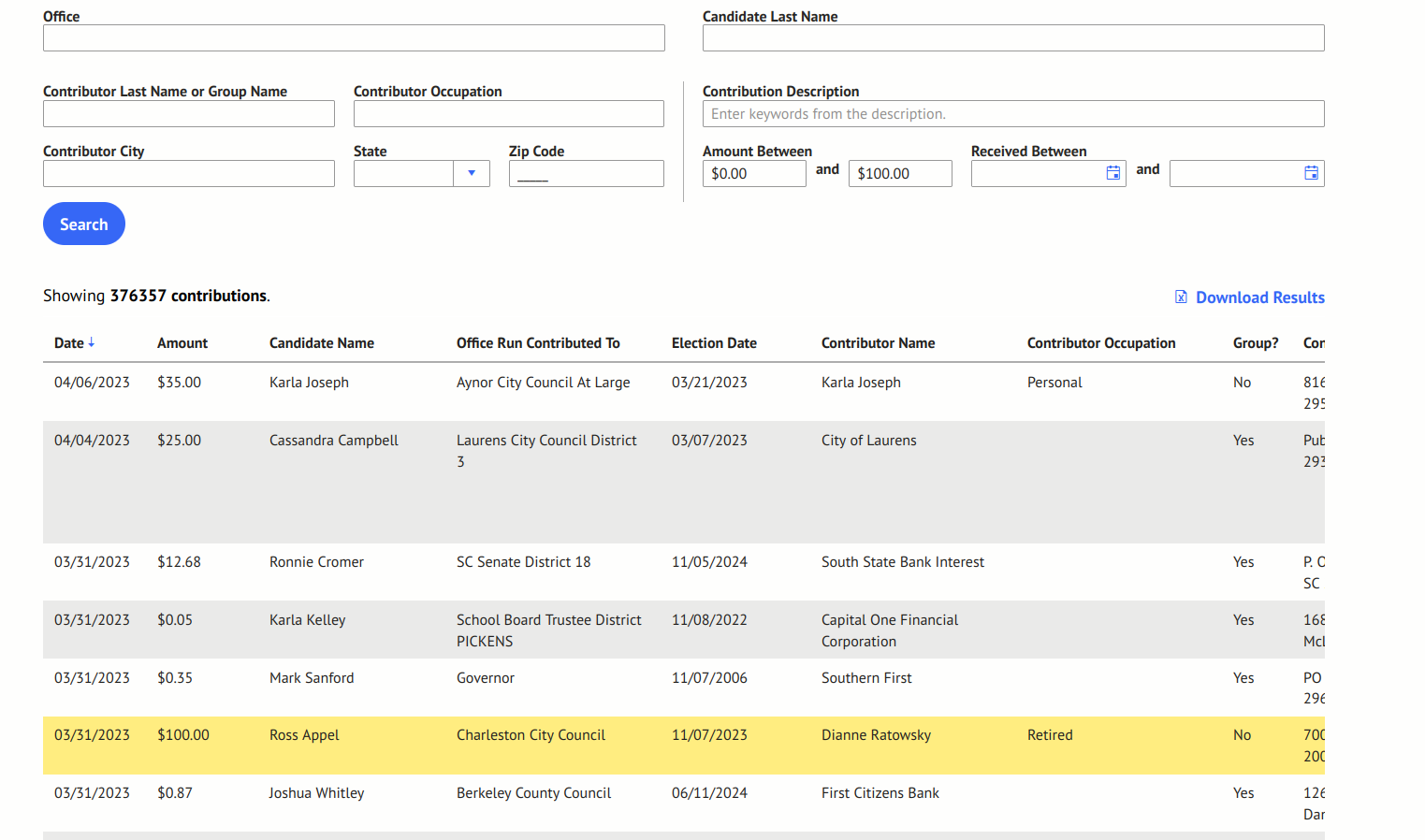

I'm particularly interested in political contributions, and the site has info on those. The contribution search tool is a little harder to work with because you have to fill in at least one of the fields. Let's see what kind of data we can get using the tool.

Okay that's great, but is there a way to get every single contribution the system knows about? Let's use the network requests tool again to figure out how the browser gets the data to build the table.

```json
{
  "amountMin": 0,
  "amountMax": 100,
  "officeRun": "",
  "candidate": "",
  "contributorName": "",
  "contributorOccupation": "",
  "contributionDescription": "",
  "contributorCity": "",
  "contributorZip": null
}
```

Alright, I'm going to use Python to send these requests in order to make things easier to work with.

Here's some code that does what the browser does but instead of setting a reasonable minimum and maximum contribution amount, we set the values to catch every possible contribution.

import requests

url = "https://ethicsfiling.sc.gov/api/Candidate/Contribution/Search/"

payload = {

"amountMin": -99999999,

"amountMax": 99999999,

"officeRun": "",

"candidate": "",

"contributorName": "",

"contributorOccupation": "",

"contributionDescription": "",

"contributorCity": "",

"contributorZip": None

}

response = requests.request("POST", url, json=payload) #this sends the request to the server and stores the response in a variable

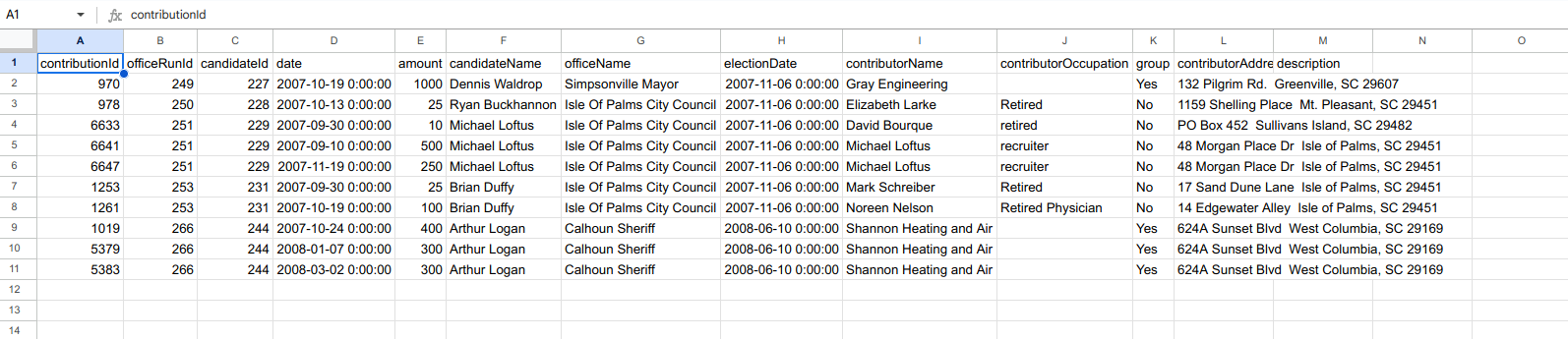

print(response.status_code)In the above code I'm just testing if the server sending back the response requires that the request parameters to be filled in, if not, then we can get all of the data that the system has. A response returns a status code that tells the browser or in this case the programming language if the request was successful. Typically, if a server requires specific parameters to be filled the status code will not be a number between 200 and 299. In the above code, the output of print(response.status_code) is 200. We can do some more checks to see what we've got in the response, but let's just look at the first ten rows

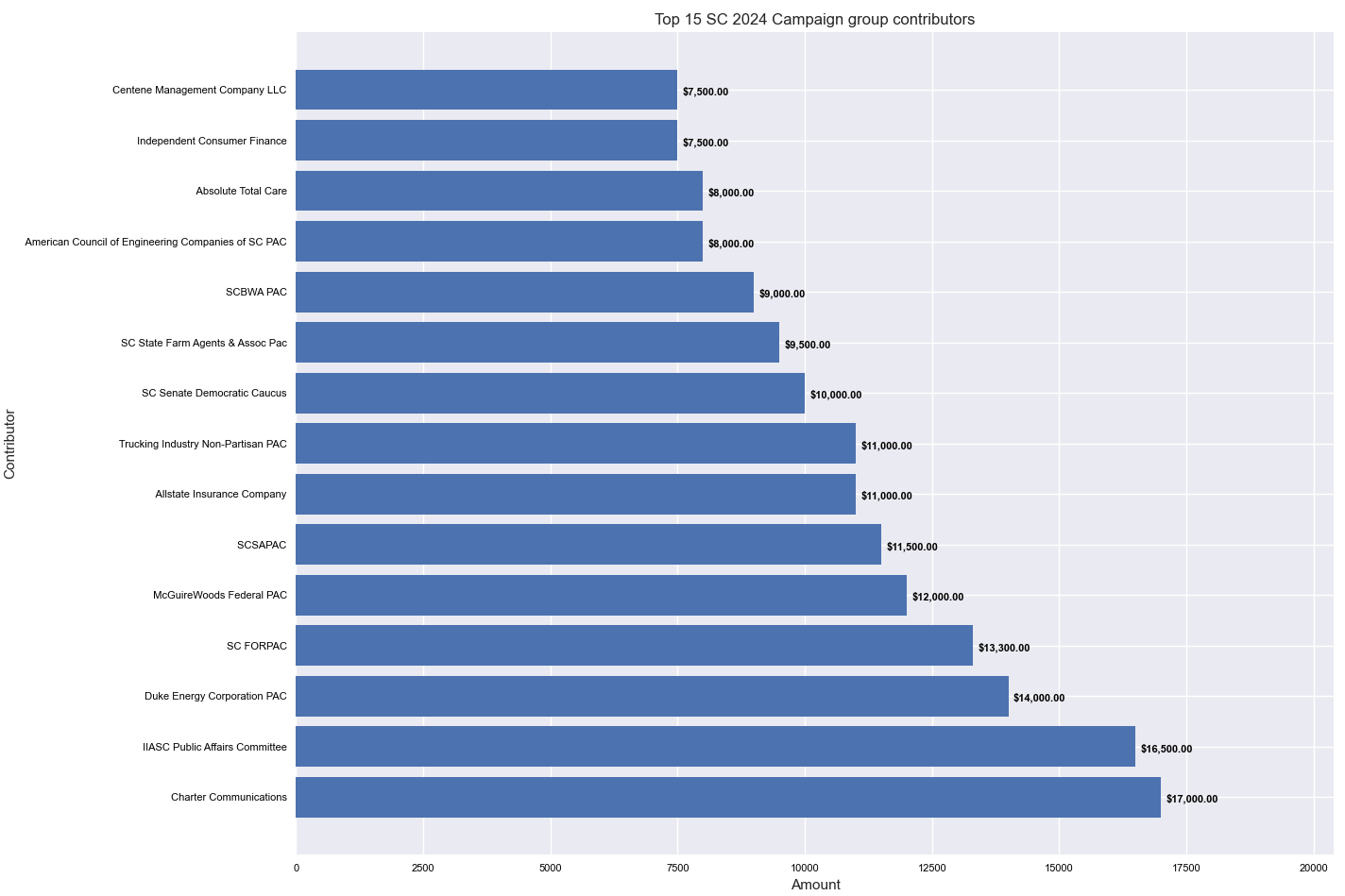

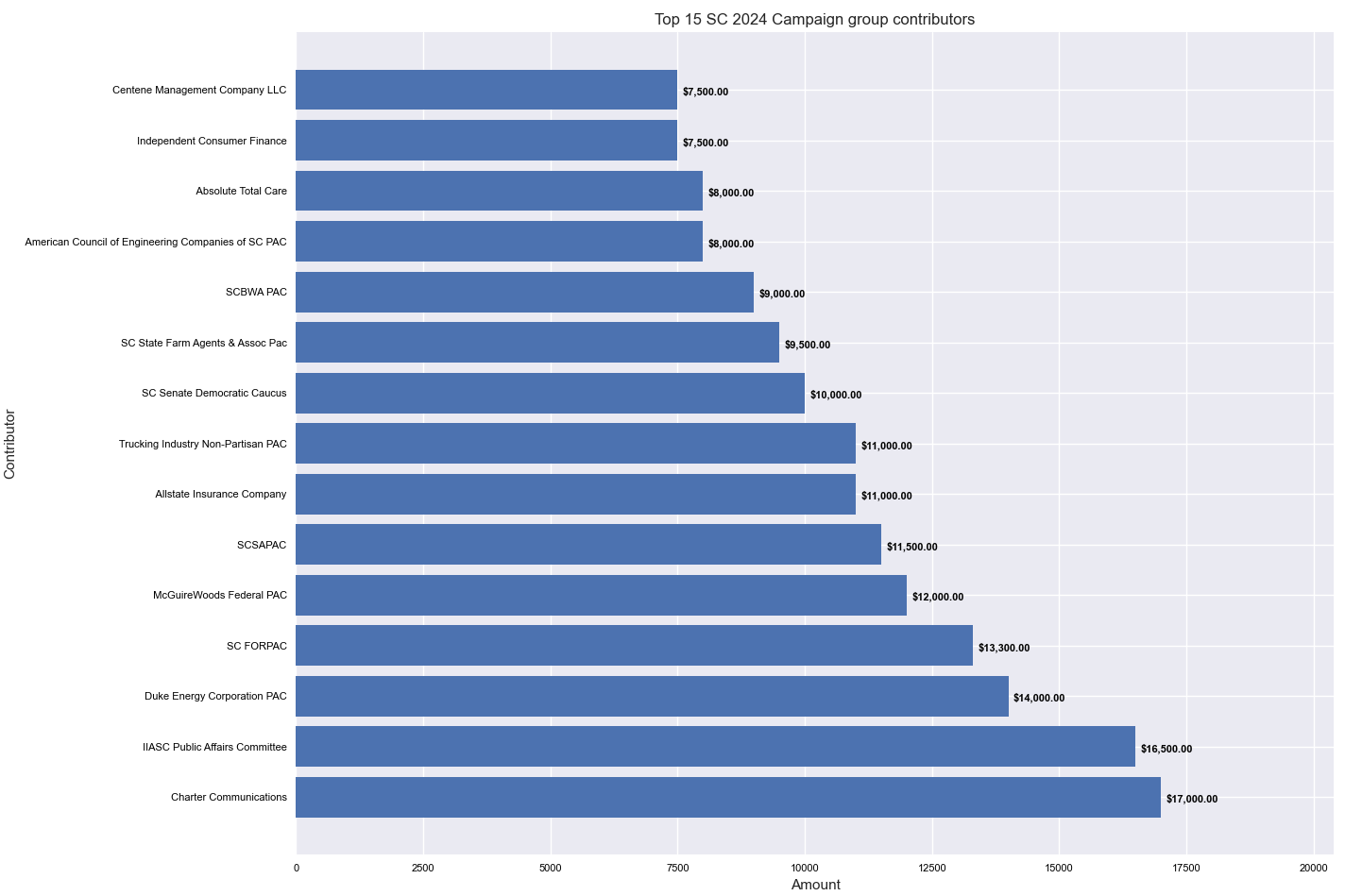
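Circling back to the status code check for a moment, here is a small sketch of the "is it between 200 and 299?" rule described above. The helper names `is_success` and `describe_status` are hypothetical and the list of codes is just for illustration. In practice, the requests library's `response.raise_for_status()` is a common alternative: it raises an exception for 4xx and 5xx responses.

```python
from http import HTTPStatus


def is_success(status_code):
    """True for the 2xx range, which signals that the request was accepted."""
    return 200 <= status_code <= 299


def describe_status(status_code):
    """Return a short human-readable summary of a status code."""
    try:
        phrase = HTTPStatus(status_code).phrase
    except ValueError:
        # Codes that the standard library does not know about.
        phrase = "Unknown status"
    verdict = "success" if is_success(status_code) else "not a success"
    return f"{status_code} {phrase}: {verdict}"


for code in (200, 400, 404, 500):
    print(describe_status(code))
```

A 400 here would have suggested that the server rejects searches with every field left blank; the 200 we actually got is what makes the rest of this post possible.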

So the data we've got has individual contributions on each line. Let's see if we can make a quick graph of the top 15 contributors to campaigns for elections being held in 2024 that are listed as groups in the system.

```python
import pandas as pd

df = pd.DataFrame(response.json())  # turn the response from the server into a DataFrame
df["year"] = pd.to_datetime(df["electionDate"]).dt.year  # extract the year from the electionDate

top_15 = (
    df[df["group"].str.contains("Yes", na=False) & (df["year"] == 2024)]  # only groups, only the 2024 election year
    .groupby("contributorName")["amount"]  # group by the contributorName
    .sum()  # total the amount for each contributor
    .sort_values(ascending=False)  # sort the values, largest first
    .head(15)  # take the top 15
)
```

We can create a table of the contributions using the above code. The table looks like this.

| contributorName | amount |
|---|---|
| Charter Communications | 17000 |
| IIASC Public Affairs Committee | 16500 |
| Duke Energy Corporation PAC | 14000 |
| SC FORPAC | 13300 |
| McGuireWoods Federal PAC | 12000 |
| SCSAPAC | 11500 |
| Allstate Insurance Company | 11000 |
| Trucking Industry Non-Partisan PAC | 11000 |
| SC Senate Democratic Caucus | 10000 |
| SC State Farm Agents & Assoc Pac | 9500 |
| SCBWA PAC | 9000 |
| American Council of Engineering Companies of SC PAC | 8000 |
| Absolute Total Care | 8000 |
| Independent Consumer Finance | 7500 |
| Centene Management Company LLC | 7500 |
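If pandas isn't your thing, the same filter, group, sum, and sort steps can be sketched with the standard library. The field names (`group`, `electionDate`, `contributorName`, `amount`) come from the response; the function name is hypothetical, the sample rows are made up purely for illustration, and I'm assuming `electionDate` arrives as an ISO-style string, which may not hold for every row.

```python
from collections import defaultdict
from datetime import datetime


def top_group_contributors(records, year, n=15):
    """Total contributions per group contributor for one election year."""
    totals = defaultdict(float)
    for row in records:
        is_group = "Yes" in (row.get("group") or "")
        # Assumption: electionDate is an ISO-style timestamp string.
        election_year = datetime.fromisoformat(row["electionDate"]).year
        if is_group and election_year == year:
            totals[row["contributorName"]] += row["amount"]
    # Largest totals first, then keep the top n.
    return sorted(totals.items(), key=lambda item: item[1], reverse=True)[:n]


# Made-up rows for illustration only; these are not real contributions.
sample = [
    {"contributorName": "Example PAC", "amount": 1000, "group": "Yes", "electionDate": "2024-11-05T00:00:00"},
    {"contributorName": "Example PAC", "amount": 500, "group": "Yes", "electionDate": "2024-11-05T00:00:00"},
    {"contributorName": "Jane Doe", "amount": 250, "group": "No", "electionDate": "2024-11-05T00:00:00"},
    {"contributorName": "Other PAC", "amount": 2000, "group": "Yes", "electionDate": "2023-11-07T00:00:00"},
    {"contributorName": "Sample Committee", "amount": 700, "group": "Yes", "electionDate": "2024-06-11T00:00:00"},
]

print(top_group_contributors(sample, 2024))
```

With the real data, passing `response.json()` in place of `sample` should give the same ranking as the table above, provided the date assumption holds.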

I've been pretty code-heavy in this post, so I'll spare you the code to create the graph, but this is what the finalized graph looks like.

And with that we have a graph that can give us insight into how the 2024 campaign cycle is already ramping up.

This is just a small portion of the data that drives the SC Ethics Commission's campaign finance system. There's much more here to explore that I haven't discussed in this article, like the Statement of Economic Interests reports that candidates must file. I haven't seen anyone use the system in this way, and I hope this post encourages people to start thinking about going beyond what the system provides to the user.

Thanks for reading my first post! If you could share this with other folks who might find it interesting, I'd sincerely appreciate it. Next week's post will be about a rabbit hole I went down while writing a Wikipedia article about the judge who presided over the Murdaugh trial.